Project information

- Name: NeRF: Neural Radiance Field 3D Reconstruction

- Course: CIS580 Machine Perception

- Instructor: Prof. Kostas Daniilidis

- Project date: Nov-Dec 2023

- Project URL: GitHub

About

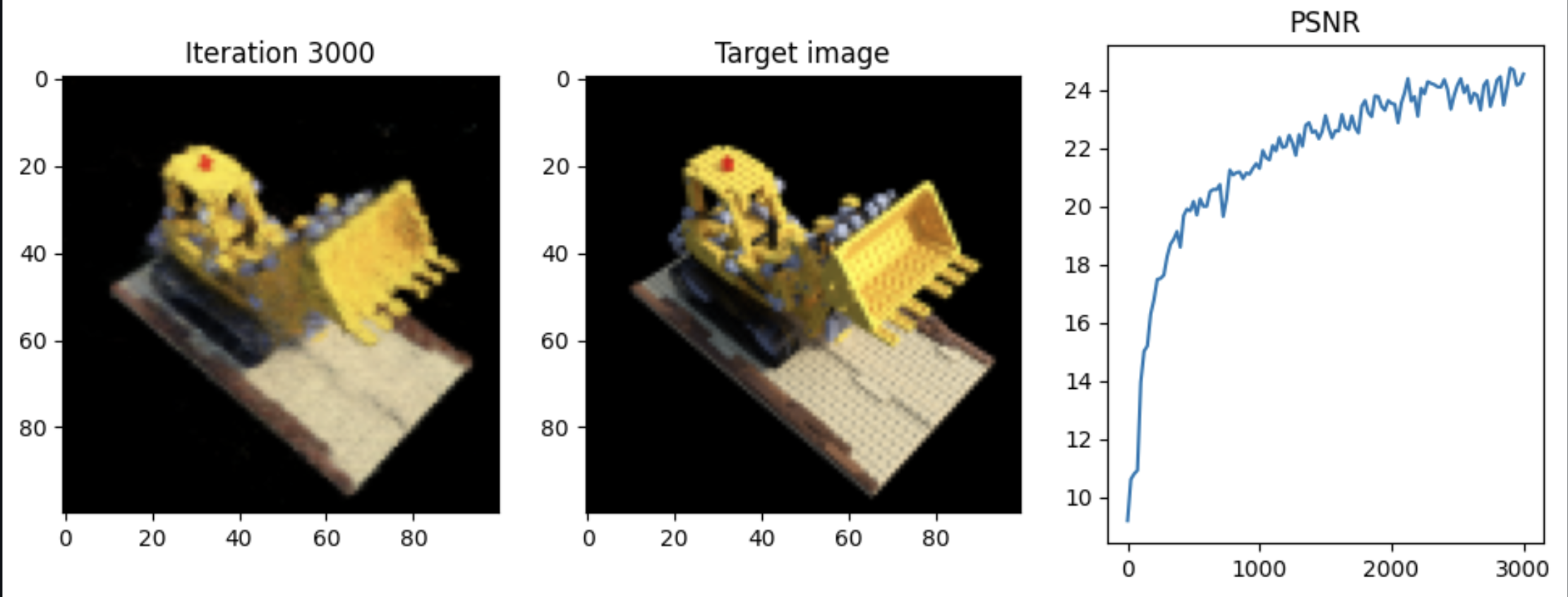

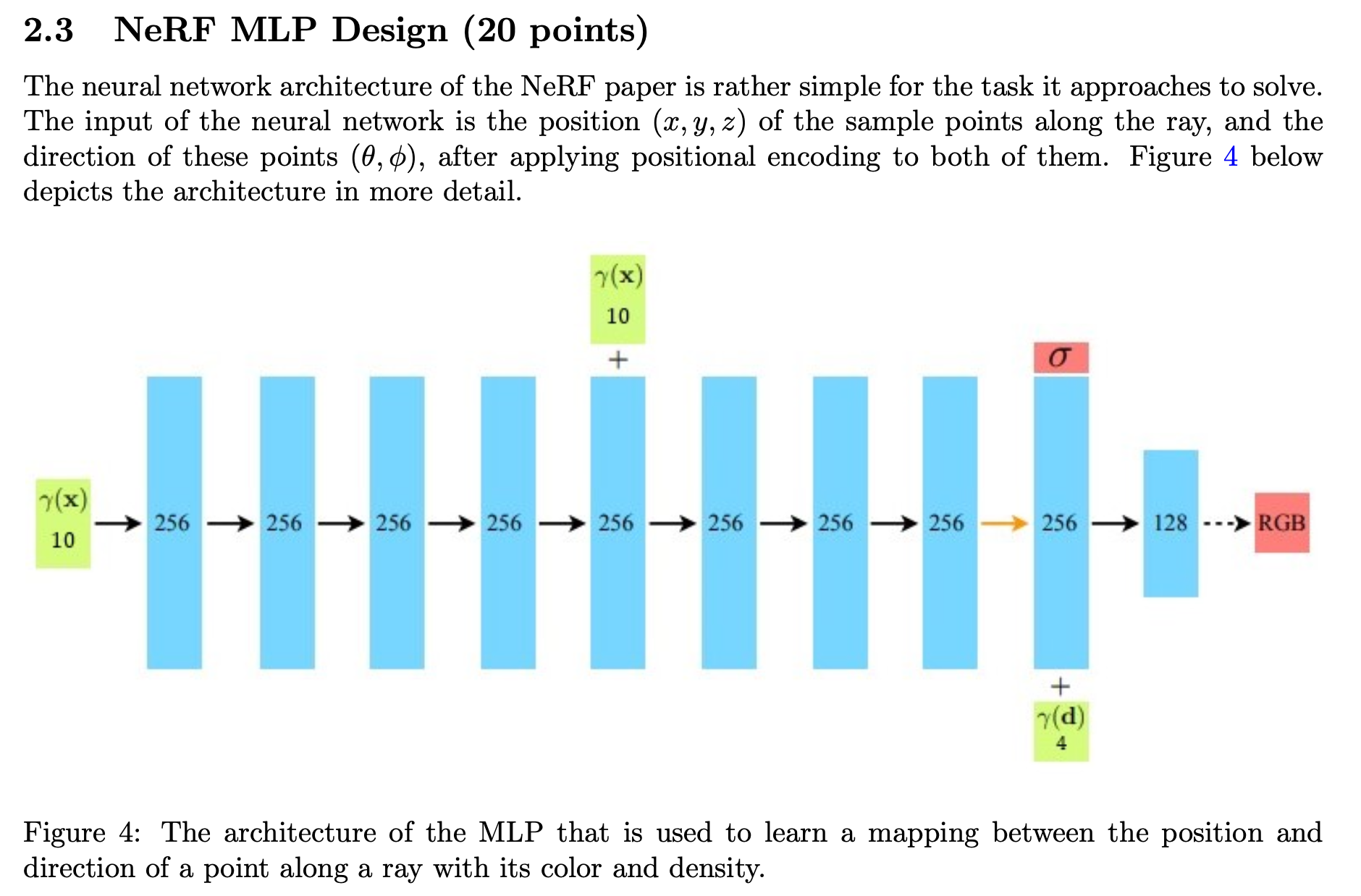

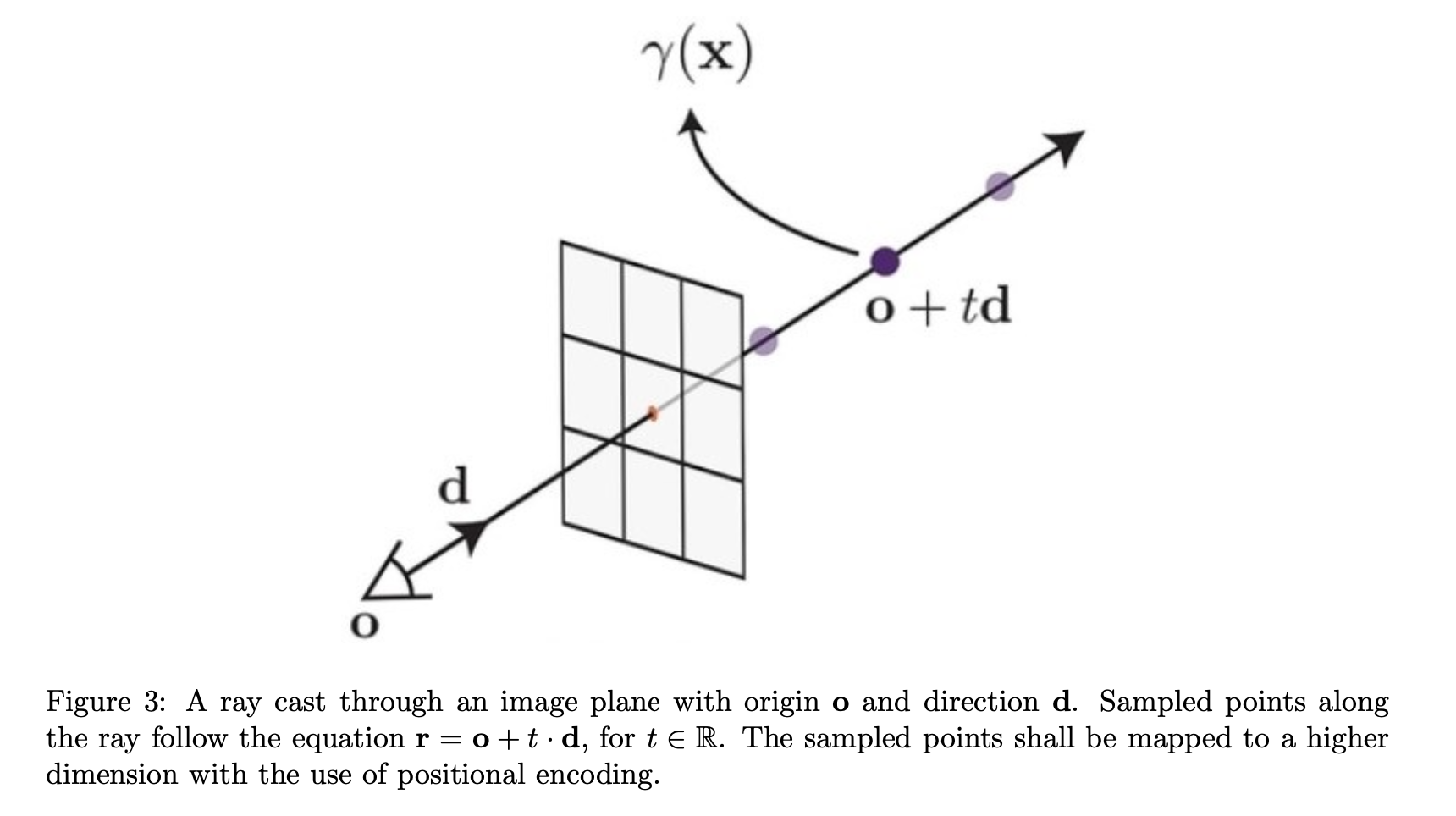

This project implemented Neural Radiance Fields (NeRF) for novel view synthesis of complex 3D scenes. The first part fit a single 2D image with a multilayer perceptron (MLP), using positional encoding to improve the mapping from pixel coordinates to RGB colors. The core part represented a 3D scene as a set of points, each with a color and a density, and designed an MLP to learn the mapping from a point's position and viewing direction to those attributes. Volume rendering then approximated each pixel's color from the colors and densities of points sampled along the corresponding camera ray. Rendering an image therefore required computing each ray's origin and direction, sampling points along the rays, passing them through the NeRF MLP, and applying the volumetric rendering equation. The final stage trained the NeRF model and rendered novel views of the scene, aiming for a high Peak Signal-to-Noise Ratio (PSNR).
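
To make the pipeline described above more concrete, here is a minimal, dependency-free sketch of three of its pieces: positional encoding, volume rendering along one ray, and PSNR. It is not the project's actual code. The function names (`positional_encoding`, `render_ray`, `psnr`), the choice to keep the raw coordinate in the encoding, and the large distance assigned to the last sample are all assumptions made for illustration. A real implementation would normally use a tensor library and batch many rays at once.

```python
import math


def positional_encoding(x, num_freqs=4):
    """Encode a scalar coordinate as [x, sin(2^k*pi*x), cos(2^k*pi*x)] for k < num_freqs.

    Keeping the raw coordinate x in the output is an assumption; some variants drop it.
    """
    features = [x]
    for k in range(num_freqs):
        freq = (2.0 ** k) * math.pi
        features.append(math.sin(freq * x))
        features.append(math.cos(freq * x))
    return features


def render_ray(colors, densities, depths):
    """Approximate one pixel's color from samples along a single ray.

    colors:    list of (r, g, b) tuples, one per sample
    densities: list of non-negative densities (sigma), one per sample
    depths:    increasing distances of the samples along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    for i, (color, sigma) in enumerate(zip(colors, densities)):
        # Spacing to the next sample; the last sample gets a very large
        # spacing (an assumed convention for closing off the ray).
        delta = depths[i + 1] - depths[i] if i + 1 < len(depths) else 1e10
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - alpha
    return pixel


def psnr(mse, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    # Two samples on one ray: an opaque red point in front of a green one.
    print(render_ray([(1, 0, 0), (0, 1, 0)], [1e3, 1e3], [0.0, 1.0]))
    print(len(positional_encoding(0.5)))
    print(psnr(0.01))
```

In this sketch each sample's contribution is its opacity multiplied by the transmittance accumulated in front of it, so an opaque point near the camera hides whatever lies behind it, and a ray through empty space (all densities zero) renders black.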